SSD Mobilenet V2 on custom dataset:

- Chocky _18

- Aug 23, 2022

- 6 min read

SSD Mobilenet V2 is a one-stage object detection model which has gained popularity for its lean network and novel depthwise separable convolutions. It is a model commonly deployed on low compute devices such as mobile (hence the name Mobilenet) with high accuracy performance.

Original Model

Classifier, name: detection_classes. Contains predicted bounding-boxes classes in a range [1, 91]. The model was trained on Common Objects in Context (COCO) dataset version with 91 categories of objects, 0 class is for background. Mapping to class names provided in <omz_dir>/data/dataset_classes/coco_91cl_bkgr.txt file.

Probability, name: detection_scores. Contains probability of detected bounding boxes.

Detection box, name: detection_boxes. Contains detection boxes coordinates in format [y_min, x_min, y_max, x_max], where (x_min, y_min) are coordinates of the top left corner, (x_max, y_max) are coordinates of the right bottom corner. Coordinates are rescaled to input image size.

Detections number, name: num_detections. Contains the number of predicted detection boxes.

Converted Model

The array of summary detection information, name: DetectionOutput, shape: 1, 1, 100, 7 in the format 1, 1, N, 7, where N is the number of detected bounding boxes. For each detection, the description has the format: [image_id, label, conf, x_min, y_min, x_max, y_max], where:

image_id - ID of the image in the batch

label - predicted class ID in range [1, 91], mapping to class names provided in <omz_dir>/data/dataset_classes/coco_91cl_bkgr.txt file

conf - confidence for the predicted class

(x_min, y_min) - coordinates of the top left bounding box corner (coordinates are stored in a normalized format, in a range [0, 1])

(x_max, y_max) - coordinates of the bottom right bounding box corner (coordinates are stored in a normalized format, in a range [0, 1])

Training model on Custom Dataset(Helmets):

Data Acquisition & Image Tagging

The journey of training an object detection model begins with data acquisition.

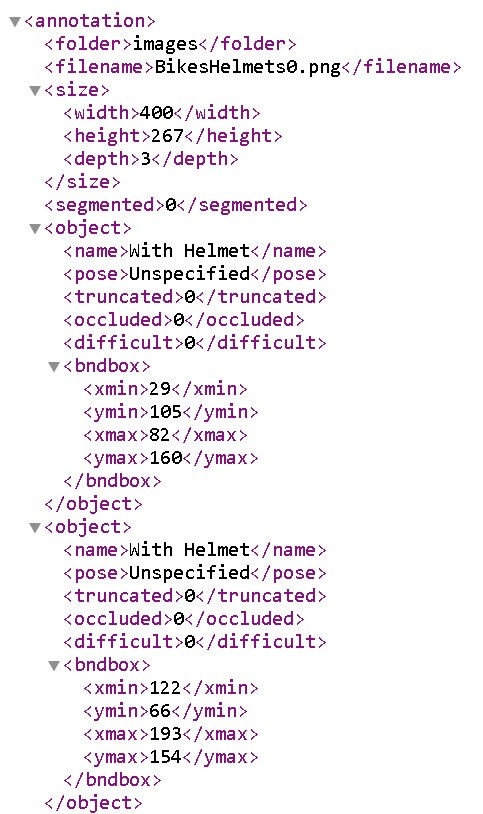

Once the data is acquired, the images in the dataset need to be tagged. Image tagging was completed using the LabelImg software, which is an open-source python-based implementation of a system that tags the bounding boxes and records them in an XML file.

A sample XML file is shown below for reference:

Preparing dataset

With the image acquisition and tagging done. The output generated from the tagged image dataset is an XML file containing the bounding box coordinates of the hand-tagged images. However, neural net models cannot parse XML files and require a specific format called Tensor Records. Hence the next set of code involves the conversion of the XML file to an intermediate CSV output and then the final conversion of the CSV output to TF-Records.

import os

import glob

import pandas as pd

import xml.etree.ElementTree as ET

def xml_to_csv(path):

xml_list = []

classes_names = []

for xml_file in glob.glob(path + '/*.xml'):

tree = ET.parse(xml_file)

root = tree.getroot()

for member in root.findall('object'):

value = (root.find('filename').text,

int(root.find('size')[0].text),

int(root.find('size')[1].text),

member[0].text,

int(member[4][0].text),

int(member[4][1].text),

int(member[4][2].text),

int(member[4][3].text)

)

xml_list.append(value)

column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']

xml_df = pd.DataFrame(xml_list, columns=column_name)

classes_names = list(set(classes_names))

classes_names.sort()

return xml_df, classes_names

def main():

for directory in ['train', 'test']:

print(directory)

image_path = os.path.join(os.getcwd(), 'research/object_detection/images/{}'.format(directory))

xml_df, classes = xml_to_csv(image_path)

print(os.getcwd())

xml_df.to_csv('research/object_detection/data/{}_labels.csv'.format(directory), index=None)

print('Successfully converted xml to csv.')

main()The CSV file output for both the train and validation dataset is then converted to TF-Records format using the below code.

There are several things to take note of over here. While creating the TF-Records file, the label map needs to be generated. The label map is essentially an encoder for the classes to be trained as the neural net predicts only in numbers after the final sigmoid layer. In our case, since we are training for "With helmet" and "Without Helmet", there are classes 1 and 2.

# Convert csv to tf record files - To switch to a code based version so that the sys.exit dont take place.

from __future__ import division

from __future__ import print_function

from __future__ import absolute_import

import os

import io

import pandas as pd

import tensorflow as tf

from PIL import Image

from object_detection.utils import dataset_util

from collections import namedtuple, OrderedDict

"""

Usage:

# From tensorflow/models/

# Create train data:

python Generate_TF_Records.py --csv_input=data/train_labels.csv --output_path=train.record

# Create test data:

python generate_tfrecord.py --csv_input=data/test_labels.csv --output_path=test.record

"""

#flags = tf.compat.v1.flags

#flags.DEFINE_string('csv_input', '', 'Path to the CSV input')

#flags.DEFINE_string('output_path', '', 'Path to output TFRecord')

#flags.DEFINE_string('image_dir', '', 'Path to images')

#LAGS = flags.FLAGS

# TO-DO replace this with label map

def class_text_to_int(row_label):

if row_label == 'With Helmet':

return 1

else:

return 2

def split(df, group):

data = namedtuple('data', ['filename', 'object'])

gb = df.groupby(group)

return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]

def create_tf_example(group, path):

print(path)

print(group.filename)

with tf.compat.v1.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = Image.open(encoded_jpg_io)

width, height = image.size

filename = group.filename.encode('utf8')

image_format = b'jpg'

xmins = []

xmaxs = []

ymins = []

ymaxs = []

classes_text = []

classes = []

for index, row in group.object.iterrows():

xmins.append(row['xmin'] / width)

xmaxs.append(row['xmax'] / width)

ymins.append(row['ymin'] / height)

ymaxs.append(row['ymax'] / height)

classes_text.append(row['class'].encode('utf8'))

classes.append(class_text_to_int(row['class']))

tf_example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(filename),

'image/source_id': dataset_util.bytes_feature(filename),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature(image_format),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

}))

return tf_example

def main(_):

for directory in ['train', 'test']:

output_path = os.path.join(os.getcwd(), 'research/object_detection/data/{}.record'.format(directory))

print(output_path)

input_path = os.path.join(os.getcwd(), 'research/object_detection/data/{}_labels.csv'.format(directory))

print(input_path)

writer = tf.io.TFRecordWriter(output_path)

print(os.getcwd())

path = os.path.join(os.getcwd(), 'research/object_detection/images/{}'.format(directory))

print(path)

examples = pd.read_csv(input_path)

grouped = split(examples, 'filename')

for group in grouped:

tf_example = create_tf_example(group, path)

writer.write(tf_example.SerializeToString())

writer.close()

#output_path = os.path.join(os.getcwd(), FLAGS.output_path)

print('Successfully created the TFRecords: {}'.format(output_path))

if __name__ == '__main__':

tf.compat.v1.app.run()With the TF record files generated, the next stage involves the creation of the object detection text file. The object detection text file will be referred to by the model to determine the text on the bounding box and needs to correspond to the item label. For the tree detection problem statement, the object detection text file is shown below:

item {id: 1

name: 'With Helmet'}

item {id: 2

name: 'Without Helmet'}With this, the data preparing stage is complete in the model building.

Configuring Training

Configuration of the training involves the selection of the model as well as model parameters such as batch size, number of steps, training, and testing TF-Records directory. Thankfully, TensorFlow’s object detection pipeline comes with a pre-configured file that optimizes most of the configuration selection for efficient model training. We can download this configuration file by using the below command.

!wget https://raw.githubusercontent.com/tensorflow/models/master/research/object_detection/configs/tf2/ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8.config

print(os.getcwd())

base_config_path = 'ssd_mobilenet_v2_fpnlite_320x320_coco17_tpu-8.config'If we take a look at the configuration file, there are several parameters that are required to be updated. Firstly, we need to configure the path to the label map or the object detection text file that we created earlier so that the model is aware of the label to place on the image once detected. Next, we need to provide the path to the training and testing TF-Records files. The number of classes is set as 2 and a default batch size of 4 was set for the training. The number of training steps, which refers simply to the number of training epochs is then set to 1000. The SSD Mobilenet V2 model is then downloaded and the location of the checkpoint file is also incorporated in the config file.

Training, Monitoring, and testing

To simply start training the model, run the below code which will initiate the training pipeline in TensorFlow.

!python model_main_tf2.py \

--pipeline_config_path={pipeline_config_path} \

--model_dir={model_dir} \

--alsologtostderr \

--num_train_steps={num_steps} \

--sample_1_of_n_eval_examples=1 \

--num_eval_steps={num_eval_steps}to test the model, the following code can be run which includes loading the newly trained model.

import tensorflow as tf

tf.keras.backend.clear_session()

model_dir = pathlib.Path('dir')

model = tf.saved_model.load(str(model_dir),None)

print(model)Next, with the model loaded, the inference can be performed by running the following code on all the test images.

def run_inference_for_single_image(model, image):

image = np.asarray(image)

# The input needs to be a tensor, convert it using `tf.convert_to_tensor`.

input_tensor = tf.convert_to_tensor(image)

# The model expects a batch of images, so add an axis with `tf.newaxis`.

input_tensor = input_tensor[tf.newaxis,...]

# Run inference

model_fn = model.signatures['serving_default']

output_dict = model_fn(input_tensor)

# All outputs are batches tensors.

# Convert to numpy arrays, and take index [0] to remove the batch dimension.

# We're only interested in the first num_detections.

num_detections = int(output_dict.pop('num_detections'))

output_dict = {key:value[0, :num_detections].numpy()

for key,value in output_dict.items()}

output_dict['num_detections'] = num_detections

# detection_classes should be ints.

output_dict['detection_classes'] = output_dict['detection_classes'].astype(np.int64)

# Handle models with masks:

if 'detection_masks' in output_dict:

# Reframe the the bbox mask to the image size.

detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(

output_dict['detection_masks'], output_dict['detection_boxes'],

image.shape[0], image.shape[1])

detection_masks_reframed = tf.cast(detection_masks_reframed > 0.5,

tf.uint8)

output_dict['detection_masks_reframed'] = detection_masks_reframed.numpy()

return output_dictfor image_path in glob.glob('images/test/*.jpg'):

image_np = load_image_into_numpy_array(image_path)

output_dict = run_inference_for_single_image(model, image_np)

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

output_dict['detection_boxes'],

output_dict['detection_classes'],

output_dict['detection_scores'],

category_index,

instance_masks=output_dict.get('detection_masks_reframed', None),

use_normalized_coordinates=True,

line_thickness=8)

display(Image.fromarray(image_np))

Comments